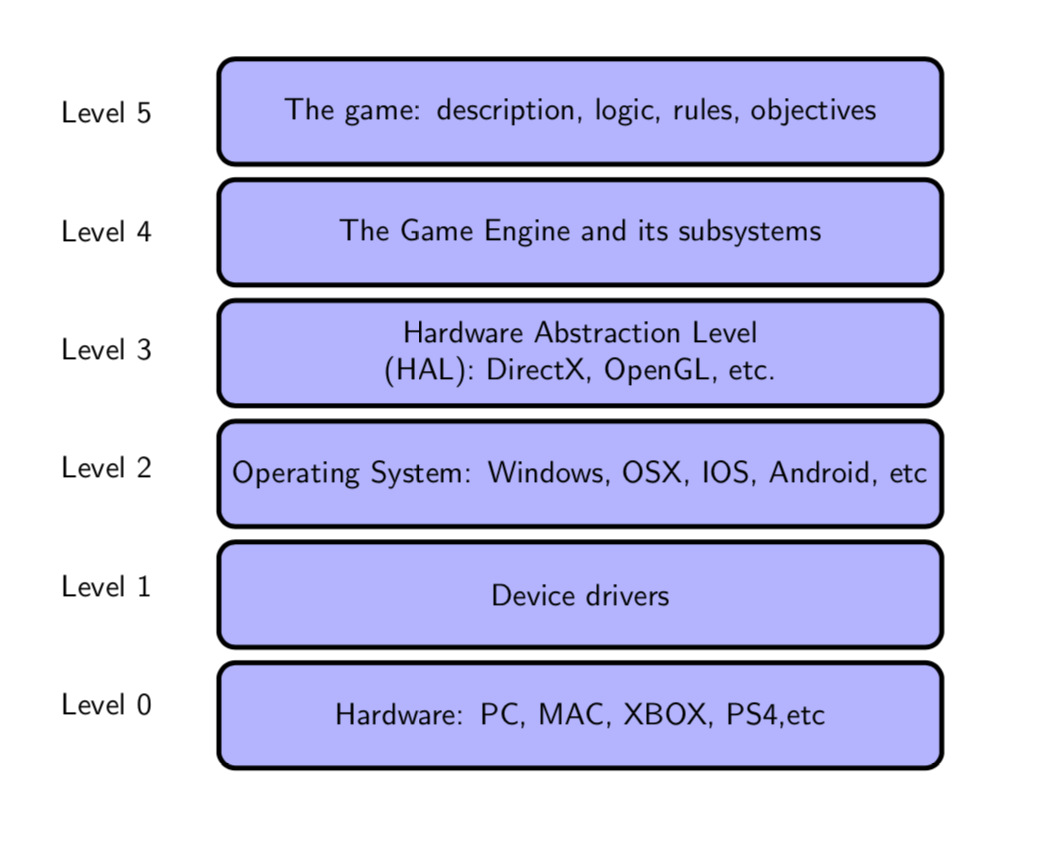

A useful tool to understand the architecture of modern platforms for the development of video games is the layered model. Complex systems are characterized by a set of components (even hundreds or thousands) that interact with each other. The layered model consists of dividing the system into various levels of abstraction: each level is made up of various components that carry out the actions within the same level and use the services offered by lower levels.

For example, the layered model of a computer represents its functions as a hierarchy of levels of abstraction, from the application level down to the basic hardware level: each function of a given level is implemented using services offered by lower levels, without worrying about the often very complex technical details of how those levels are implemented. For instance, any arithmetic or logic operation in an application program is ultimately carried out by electronic circuits built from the transistors of the CPU, but these details are completely hidden from the programmer.

This modular subdivision makes the architecture and functions of the system easier to understand, and greatly facilitates system maintenance.

In this article a general overview of the architecture and the main components of a video game development system is given.

For an in-depth study of the architecture of modern game engines, the books listed in the bibliography are very useful [1][2][3].

1) Components of a modern video game system

The development of video games is a very complex activity and would be almost prohibitive without a modular architecture and the aid of efficient development environments. At present there is no recognized standard for the architecture of video game development systems. However, despite the differences, the various products on the market share a structure composed of the following levels of abstraction:

1.1) The game: description, logic, rules, objectives

A video game can be defined as a set of problem solving activities, aimed at achieving certain objectives, in compliance with precise rules. The development of a video game starts from an initial idea that passes through various phases of creativity: incubation, deepening, evaluation and detailed analysis. The detailed analysis leads to the development of the design document that describes the various aspects of the video game: rules, objectives, actors, resources, levels, sound, music, user interface, etc.

The design document is the input for the developer, who, through the software framework associated with the game engine, can translate the initial idea into an actual game on the various platforms.

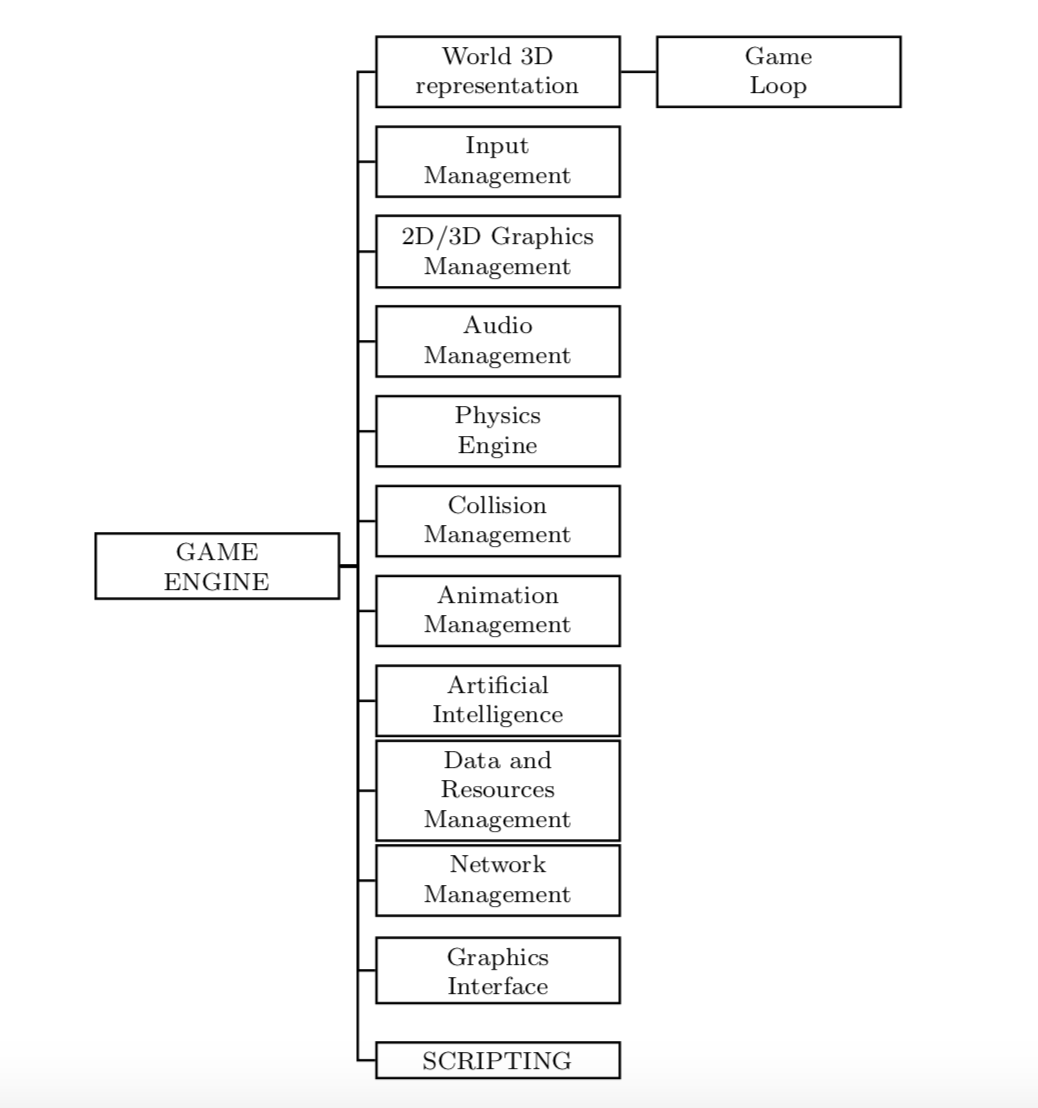

1.2) The game engine and its subsystems

Game engines provide an integrated environment composed of development tools and reusable software components, which allow fast and simplified development.

Game engines abstract away the details of low-level hardware and software (graphics, physics, operating systems, etc.), so the developer can concentrate on the rules of the game and on the interaction between the player and the other subjects in the game environment. For example, a programmer who has to draw a 3D character in a game scene does not have to worry about the complex hardware-level operations needed to prepare the image in the buffer and send the pixels to the screen. Game engines support modern general-purpose programming languages (C++, C#, Java, etc.), which are also used to develop web and multimedia applications. Examples of very successful engines are Unreal Engine and Unity3D.

Game engines have a modular architecture; the main subsystems are the following:

The representation of the 3D world and the game loop

The universe of the game is the 2D/3D environment in which the various objects live: characters, video cameras, cars, props, etc.

A fundamental function of the game engine is to provide an abstract model of the universe of the game and all the objects that are inside. For each object, the various properties and status must be recorded at all times.

Periodic updating of the status of the various objects is one of the fundamental tasks of the Game Loop. Basically the game loop in each cycle updates the status of the various objects (position, collisions, etc.) and graphically renders the objects (GameObjects) in the frame.
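The update-then-render cycle described above can be sketched as follows. This is a minimal illustration, not the loop of any particular engine: the `Ball` class, the `run_game_loop` function and the fixed time step of 1/30 s are assumptions made for the example.

```python
class Ball:
    """Hypothetical game object: a point moving at constant velocity."""

    def __init__(self, x, vx):
        self.x = x      # position along one axis
        self.vx = vx    # velocity in units per second

    def update(self, dt):
        # Advance the state by one time step.
        self.x += self.vx * dt


def run_game_loop(objects, frames, dt=1.0 / 30.0, render=None):
    """Run a fixed number of cycles: update every object, then render."""
    for _ in range(frames):
        for obj in objects:
            obj.update(dt)        # 1. update the state of each object
        if render is not None:
            render(objects)       # 2. draw the frame (optional callback)
    return objects


ball = Ball(x=0.0, vx=3.0)
run_game_loop([ball], frames=30)  # simulate one second at 30 fps
```

A real loop would run until the player quits and would measure the elapsed time of each cycle instead of assuming a constant `dt`.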

Each GameObject is composed of a set of components: the Transform object that records the position, the rotation and the scale, the graphic renderer, the physics, the script in which the logic is coded, etc.

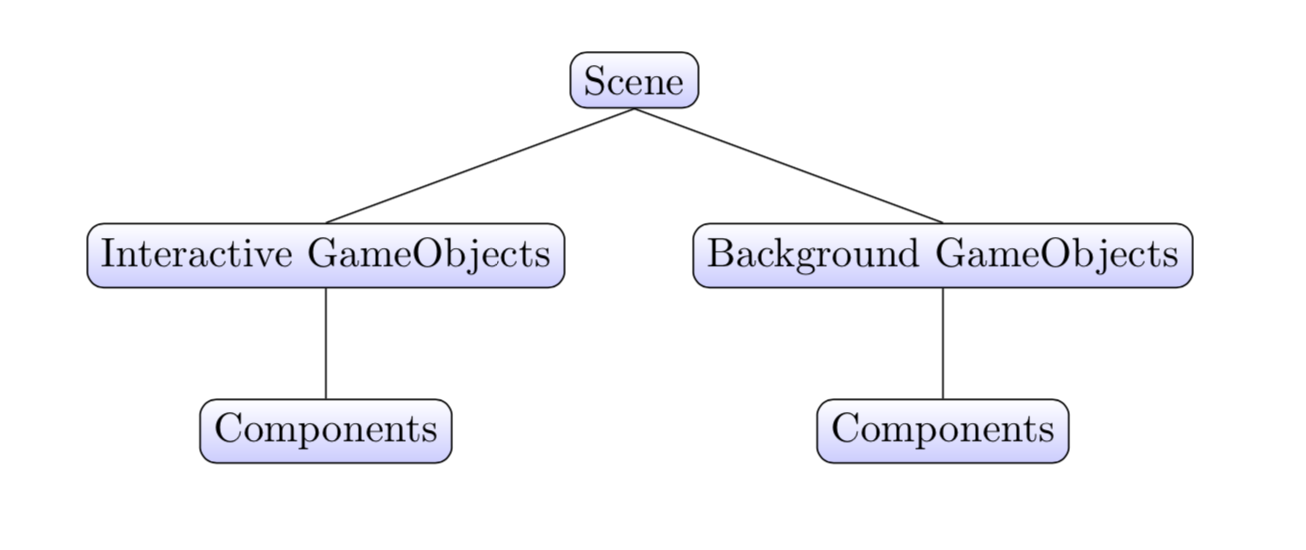

The scene represents a level in a game; it coordinates and manages GameObjects and their attached components (enemies, terrain, lights, video cameras, etc.). The structure of a scene can be of this type:

Some engines use a traditional class hierarchy to represent the various types of connected objects, through the inheritance mechanism to reuse code and functionality. Such architecture can be inefficient in the case of particularly complex games in which several exceptions must be handled. In such situations the approach based on component architecture (which favors class composition over inheritance) may be more useful.
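As a rough sketch of the component-based approach, the example below builds a game object by attaching components instead of subclassing. The class names `Transform`, `Mover` and `GameObject` and their methods are hypothetical and only loosely inspired by the Unity terminology used in this article.

```python
class Transform:
    """Position of an object (rotation and scale omitted for brevity)."""

    def __init__(self, x=0.0, y=0.0):
        self.x, self.y = x, y


class Mover:
    """Hypothetical component: moves its owner at constant velocity."""

    def __init__(self, vx, vy):
        self.vx, self.vy = vx, vy

    def update(self, owner, dt):
        owner.transform.x += self.vx * dt
        owner.transform.y += self.vy * dt


class GameObject:
    """A container of components; behavior comes from what is attached."""

    def __init__(self, name):
        self.name = name
        self.transform = Transform()
        self.components = []

    def add(self, component):
        self.components.append(component)
        return self  # allow chaining

    def update(self, dt):
        for component in self.components:
            component.update(self, dt)


car = GameObject("car").add(Mover(vx=2.0, vy=0.0))
car.update(0.5)  # half a second of movement
```

New behavior is added by writing another component with an `update(owner, dt)` method, without touching the `GameObject` class or creating a deeper class hierarchy.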

Input management

The user interacts intensively with the game through the input devices: keyboard, mouse, joysticks, touchpad, etc. The engine must recognize the commands sent by the user and make the necessary updates on the data and properties of the objects involved. The input is managed in two fundamental ways: event management or polling management. The first mode provides for the possibility of intercepting the various events in real time (for example pressing a key on the keyboard) and performing the appropriate action. The polling technique consists in periodically querying the status of the objects (for example the mouse position on screen) and making the values available to the programmer for the appropriate processing.
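The difference between the two modes can be illustrated with a small simulated keyboard. The `Keyboard` class and its methods are assumptions made for this sketch; real engines expose their own input APIs.

```python
class Keyboard:
    """Hypothetical input device that supports both events and polling."""

    def __init__(self):
        self.pressed = set()   # current key state, read by polling
        self.handlers = {}     # key -> list of callbacks, used for events

    def on_key_down(self, key, handler):
        # Event style: register a callback that runs when the key goes down.
        self.handlers.setdefault(key, []).append(handler)

    def press(self, key):
        # Called by the (simulated) driver when a key is pressed.
        self.pressed.add(key)
        for handler in self.handlers.get(key, []):
            handler(key)

    def release(self, key):
        self.pressed.discard(key)

    def is_down(self, key):
        # Polling style: the game asks for the state when it needs it.
        return key in self.pressed


log = []
kb = Keyboard()
kb.on_key_down("space", lambda key: log.append("jump"))

kb.press("space")                  # event: the handler runs immediately
kb.press("left")
moving_left = kb.is_down("left")   # polling: queried inside the game loop
kb.release("left")
```

Events suit one-off actions such as jumping or opening a menu, while polling suits continuous state such as movement keys or the mouse position.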

2D/3D graphics management

The graphic engine must prepare and display on the screen images of the various objects and of the environments in which the game takes place. In 2D graphics, textures are used to form images on the scene. In 3D environments, textures are used to paint 3D objects.

To have a realistic simulation of reality a high frame update frequency is required, so that the action is carried out continuously without flicker effects.

Graphics engines rely on popular APIs such as OpenGL and Direct3D, which interface with the hardware of the various graphics cards. During each Game Loop cycle the objects are updated and redrawn on the screen.

3D games typically use assets created with specific products such as Blender, Maya, 3ds Max, while products like PhotoShop are typically used for 2D graphics. The assets created are then imported into the game project with the appropriate adaptations and format conversions. Once imported, objects can be added to the game and modified by creating realistic environments and actors.

A commonly used value for the frame refresh rate (frame rate) is 30 fps (frames per second). This means that each frame remains on screen for about 0.0333 seconds (1/30 s) before being replaced by the next one, so an input command can take up to roughly that long before its effects become visible. In some situations, for example with fast-moving objects, a higher frame rate such as 60 fps is used for better responsiveness. Of course, the display must support that refresh rate; otherwise a higher frame rate makes no visible difference.
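The relationship between frame rate and the time available per frame is a simple reciprocal. The helper name `frame_time_ms` is an assumption made for this small example.

```python
def frame_time_ms(fps):
    """Time budget of a single frame, in milliseconds."""
    return 1000.0 / fps


budget_30 = frame_time_ms(30)   # about 33.3 ms per frame
budget_60 = frame_time_ms(60)   # about 16.7 ms per frame
```

Doubling the frame rate halves the time in which the engine must update and render the whole scene.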

Audio management (audio engine)

Sound and music are an integral part of every game. The audio subsystem generates the background music and the sound effects of the game. Its programs can run on the CPU or on dedicated processors and can be accessed at a higher level of abstraction via APIs such as OpenAL and DirectSound. Sound effects are placed in the 3D environment of the game, and their volume can be adjusted according to the distance between your character and the sound source.

Sound management is a complex procedure; to get more realism you can add frequency modulation and reverb effects to simulate the sound produced by the reflections on the walls.

Sound production must not interfere with the other functions of the game or create delays in update operations and graphics rendering. For this reason, sounds should be played from RAM, which is very fast, rather than loaded from disk at the moment they are needed, which would cause delays. However, RAM is limited, and this increases the complexity of management. The algorithms should keep in memory only the sounds needed in a given situation of the game and release the unnecessary ones.

In the Unity environment there are two components to manage sound: an AudioListener that has the listening function and an AudioSource that generates sound. You can connect an AudioSource to each object in the game, assign a specific sound and through an associated script generate the sound using the Play() function. To generate the sound, audio assets are of course necessary in the various formats: .mp3, .wav etc.

Another important aspect to consider is the distinction between sounds in a 2D or 3D environment. The 2D sound volume is independent of the distance of the AudioListener. In the 3D environment the sound volume may vary depending on the distance between the audio source and the video camera to which the AudioListener is attached.
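One simple way to model distance-dependent volume is a linear rolloff between a minimum and a maximum distance. The sketch below is an assumption made for illustration: the function `volume_3d` and its parameters `min_dist` and `max_dist` are hypothetical, and this is not the attenuation curve of Unity or any specific engine.

```python
import math


def volume_3d(source_pos, listener_pos, min_dist=1.0, max_dist=20.0):
    """Linear rolloff: full volume up to min_dist, silent from max_dist."""
    d = math.dist(source_pos, listener_pos)
    if d <= min_dist:
        return 1.0
    if d >= max_dist:
        return 0.0
    return (max_dist - d) / (max_dist - min_dist)


near = volume_3d((0, 0, 0), (0.5, 0, 0))    # inside min_dist: full volume
mid = volume_3d((0, 0, 0), (10.5, 0, 0))    # halfway between the limits
far = volume_3d((0, 0, 0), (30, 0, 0))      # beyond max_dist: silent
```

A 2D sound corresponds to skipping this calculation and always using the full volume.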

Physics engine

In modern video games, physics is very important. The physics engine is a subsystem that simulates the effects of gravity and other laws of classical physics (collisions between objects, reflection and refraction of light, fluid dynamics, etc.). The objects of the game scene behave as if they were material bodies immersed in a real environment in which the laws of physics apply.

The Unity 3D framework uses the PhysX physics engine to manage physical simulations. The physics engine can be controlled via the scripts associated with game objects. In this way we can determine the dynamic behavior of a vehicle, a projectile, etc.
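To give an idea of what a physics step does, the sketch below moves a projectile under gravity using semi-implicit Euler integration. It is a deliberately simplified illustration, not how PhysX works internally; the names `GRAVITY` and `step` and the chosen time step are assumptions.

```python
GRAVITY = -9.81  # vertical acceleration in m/s^2


def step(pos, vel, dt):
    """Semi-implicit Euler: update the velocity first, then the position."""
    x, y = pos
    vx, vy = vel
    vy += GRAVITY * dt
    return (x + vx * dt, y + vy * dt), (vx, vy)


# A projectile launched horizontally at 5 m/s from a height of 10 m.
pos, vel = (0.0, 10.0), (5.0, 0.0)
t, dt = 0.0, 0.01
while pos[1] > 0.0:          # simulate until it reaches the ground
    pos, vel = step(pos, vel, dt)
    t += dt
```

The simulated fall time is close to the analytical value sqrt(2h/g), about 1.43 s for h = 10 m; a smaller `dt` gives a more accurate result at a higher processing cost.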

Management of the physics of objects requires great processing power; it is therefore appropriate to limit the number of material objects that interact and use simple geometric shapes (e.g. the spherical shape).

Collision management

In many games the phenomenon of collision between two objects must be managed. The collision between two rigid bodies occurs when the distance between the two geometric shapes reaches a value below a given threshold, set by the developer based on the specific needs of the game. Collision detection is a necessary piece of information in different situations: to know if you are getting too close to a dangerous border or to a wall, if a fired bullet hits the target, etc.

One of the simplest methods to detect the collision between two objects is to approximate them with circular or spherical shapes and check whether the distance between the two centers is less than the sum of the two radii. With spheres the test is very cheap: a single distance comparison is enough.
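The sphere test can be written in a few lines. In this sketch the function name `spheres_collide` is hypothetical, squared distances are compared to avoid a square root, and touching spheres are counted as colliding, which is a design choice rather than a rule.

```python
def spheres_collide(center_a, radius_a, center_b, radius_b):
    """Return True if two spheres overlap or touch."""
    # Squared distance between the centers (works in 2D and 3D).
    dist_sq = sum((a - b) ** 2 for a, b in zip(center_a, center_b))
    reach = radius_a + radius_b
    # Comparing squared values avoids computing a square root.
    return dist_sq <= reach * reach


hit = spheres_collide((0, 0, 0), 1.0, (1.5, 0, 0), 1.0)    # overlapping
miss = spheres_collide((0, 0, 0), 1.0, (3.0, 0, 0), 1.0)   # too far apart
```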

Character animation

In every scene of a game there are various characters: humans, robots, animals, cars and so on. Among the elements to be considered, in addition to the characters, are also included light sources, colors, points of view, shadows, etc. The purpose of the animation subsystem is to simulate the movement of each element on the scene so that it appears as natural as possible.

The animation process is very complex and has been refined over the years. Movement is produced by showing a sequence of images at a rate high enough for the human brain to perceive it as continuous (traditionally 24 frames per second).

The most widely used technique today is skeletal animation: an articulated structure of the object, similar to a skeleton, is defined as a tree of joints, each represented by a transformation matrix that stores its position and orientation.
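The idea of a joint tree, where each joint is positioned relative to its parent, can be sketched in 2D. For brevity this example stores an angle and a bone length per joint instead of a full transformation matrix; the `Joint` class and its methods are assumptions made for illustration.

```python
import math


class Joint:
    """A joint in a 2D skeleton, rotated relative to its parent."""

    def __init__(self, name, length, angle_deg=0.0, parent=None):
        self.name = name
        self.length = length                   # length of the bone
        self.angle = math.radians(angle_deg)   # rotation relative to parent
        self.parent = parent

    def world_angle(self):
        # Rotations accumulate down the tree.
        base = self.parent.world_angle() if self.parent else 0.0
        return base + self.angle

    def origin(self):
        # A joint starts where the bone of its parent ends.
        return self.parent.tip() if self.parent else (0.0, 0.0)

    def tip(self):
        ox, oy = self.origin()
        a = self.world_angle()
        return (ox + self.length * math.cos(a), oy + self.length * math.sin(a))


shoulder = Joint("shoulder", length=2.0, angle_deg=90.0)
elbow = Joint("elbow", length=1.0, angle_deg=-90.0, parent=shoulder)
hand = elbow.tip()   # approximately (1.0, 2.0)
```

Rotating the shoulder moves the elbow and the hand with it, which is the property that makes skeletons convenient for animators.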

The surface of the object is connected to the skeleton by the skinning technique. Skinning is a method used to coat the skeleton with a deformable mesh, which is able to adapt to the movements of the bones. To achieve greater realism, the aesthetic characteristics are also designed and defined through textures and shading.

Artificial Intelligence (AI)

Some form of artificial intelligence is often included in more complex games. The game becomes more interesting if the characters seem able to control their actions according to the situation. To implement AI functions in a game you can use external libraries, rely on algorithms provided by the game engine, or develop them within the project using the scripting language (C++, C#, etc.).

The most important frameworks (Unity3D, Unreal Engine, etc.) provide tools that let you define the actions and behaviors of the actors through graphical decision editors, without having to write complicated lines of code.

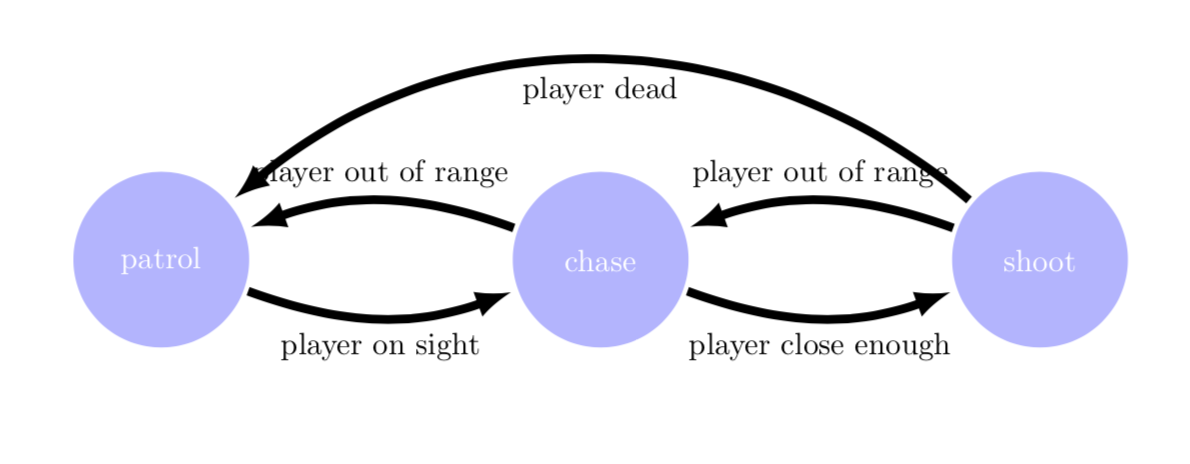

A typical situation is to control NPCs (non-player characters) so that their actions adapt to the context in an intelligent way. The NPC must respond to the player's actions. A widely used approach is based on finite state machines (FSM): the various possible situations (states) are identified, and for each one of them the appropriate actions are planned.
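A finite state machine can be expressed as a table of transitions. The states, the event names and the `NpcFsm` class below are hypothetical examples chosen for this sketch.

```python
# (current state, event) -> next state
TRANSITIONS = {
    ("idle", "player_seen"): "chase",
    ("chase", "player_in_range"): "attack",
    ("chase", "player_lost"): "idle",
    ("attack", "player_out_of_range"): "chase",
    ("attack", "player_lost"): "idle",
}


class NpcFsm:
    """Minimal table-driven state machine for an NPC."""

    def __init__(self, state="idle"):
        self.state = state

    def handle(self, event):
        # Pairs missing from the table leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


npc = NpcFsm()
events = ["player_seen", "player_in_range", "player_out_of_range",
          "player_lost", "player_in_range"]
trace = [npc.handle(e) for e in events]
# trace == ["chase", "attack", "chase", "idle", "idle"]
```

The last event is ignored because an idle NPC has no transition for it, which shows how the table constrains what the character can do in each state.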

For example, to represent the actions of an NPC following the player, you can use the following graphic:

Another interesting problem is the development of world navigation or path-finding algorithms, that is, the search for the most efficient paths for agents. The protagonists of the games (characters, animals, vehicles) must move according to certain objectives and must be able to identify the most efficient routes from start to finish. Of course, this requires knowing the local geography: walls, bridges, rivers, buildings, the position of other characters and so on. One of the most widely used path-finding algorithms is A*.
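A compact version of A* on a grid is sketched below, assuming four-directional movement with unit step cost and the Manhattan distance as heuristic. The grid encoding (`#` for walls) and the function name `astar` are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest path on a grid of strings; '#' marks a wall.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-directional unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            while cur is not None:       # walk back to the start
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if g > cost[cur]:
            continue                     # stale heap entry, skip it
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < cost.get(nxt, float("inf")):
                    cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


grid = [
    "..#.",
    "..#.",
    "....",
]
path = astar(grid, (0, 0), (0, 3))   # must go around the wall
```

In a real game the grid is usually replaced by a navigation mesh or a waypoint graph, but the search itself works the same way.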

Other forms of AI are neural networks and fuzzy logic, used to make decisions in conditions of uncertainty.

Resources management

Each game uses various types of resources, recorded on external archives, which must be loaded into memory at the appropriate time. The Resource Management subsystem maintains an inventory of all the resources used, manages the loading of the various assets in memory (levels, scenes, sprites, textures, audio, etc.) and must avoid problems with decreasing performance or system failures. The main objective is to make the resources available at the needed time, compatibly with the main memory available and the speed of data transfer with external storage media.
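One possible strategy for keeping memory use within a budget is a least-recently-used (LRU) cache. The sketch below is only one of many policies a resource manager could adopt; the `AssetCache` class, the `fake_loader` function and the byte sizes are assumptions made for illustration.

```python
from collections import OrderedDict


class AssetCache:
    """Keeps loaded assets in memory up to a byte budget (LRU eviction)."""

    def __init__(self, capacity_bytes, loader):
        self.capacity = capacity_bytes
        self.loader = loader            # hypothetical function: name -> bytes
        self.assets = OrderedDict()     # oldest entry first
        self.used = 0

    def get(self, name):
        if name in self.assets:
            self.assets.move_to_end(name)   # mark as recently used
            return self.assets[name]
        data = self.loader(name)            # slow path: read from storage
        self.assets[name] = data
        self.used += len(data)
        # Evict least recently used assets until the budget is respected.
        while self.used > self.capacity and len(self.assets) > 1:
            _, old = self.assets.popitem(last=False)
            self.used -= len(old)
        return data


loads = []


def fake_loader(name):
    """Stand-in for disk access: records the request, returns 40 bytes."""
    loads.append(name)
    return b"x" * 40


cache = AssetCache(capacity_bytes=100, loader=fake_loader)
for name in ["grass.png", "theme.wav", "grass.png", "level1.dat"]:
    cache.get(name)
```

After these requests the cache holds `grass.png` and `level1.dat`: the second request for `grass.png` was served from memory, and `theme.wav` was evicted to make room for the level data.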

Another important aspect is the possibility of suspending the game at a certain point and being able to resume at a later stage from the same situation. The system must be able to save the status of the various objects at the time of interruption and restore the exact configuration at a later time. The user must have the possibility, through an appropriate menu, to be able to choose whether to restart the game from the interruption or to start again from the beginning.
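Saving and restoring can be sketched as serializing the state of the objects to text and reading it back. The JSON layout, the `version` field and the function names `save_game` and `load_game` are assumptions made for this example.

```python
import json


def save_game(objects):
    """Serialize the state of the game objects to a JSON string."""
    return json.dumps({"version": 1, "objects": objects})


def load_game(text):
    """Restore the object state from a string produced by save_game."""
    data = json.loads(text)
    if data.get("version") != 1:
        raise ValueError("unsupported save version")
    return data["objects"]


state = {
    "player": {"x": 12.5, "y": 3.0, "health": 80},
    "door_7": {"open": True},
}
saved = save_game(state)      # in a real game, written to a file
restored = load_game(saved)   # later: read back and rebuild the scene
```

Storing a version number makes it possible to reject or convert save files written by an older release of the game.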

Network management

Many games allow several players to connect over a network. The screen can be divided into various components. The main architecture for multiplayer games is the client/server model: each player is a “client” who communicates with a central computer, the “server”, where the game is actually executed. The fundamental problem is replicating the state of the game on all the machines connected to the network, so that every player stays synchronized with the state of the server.
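The replication problem can be illustrated without real sockets by simulating an authoritative server that sends numbered snapshots to its clients. The `Server` and `Client` classes and the snapshot format are hypothetical; real networking code must also deal with latency, packet loss and bandwidth.

```python
class Server:
    """Authoritative game state: player id -> x position."""

    def __init__(self):
        self.state = {}
        self.tick = 0

    def apply(self, player_id, dx):
        # Apply a movement command received from a client.
        self.state[player_id] = self.state.get(player_id, 0) + dx

    def snapshot(self):
        # Each snapshot carries a tick number so clients can order them.
        self.tick += 1
        return {"tick": self.tick, "state": dict(self.state)}


class Client:
    """Holds a local copy of the server state."""

    def __init__(self, player_id):
        self.player_id = player_id
        self.tick = 0
        self.state = {}

    def receive(self, snap):
        if snap["tick"] > self.tick:     # ignore stale or duplicate data
            self.tick = snap["tick"]
            self.state = dict(snap["state"])


server = Server()
alice, bob = Client("alice"), Client("bob")

server.apply("alice", 3)
server.apply("bob", -1)
snap1 = server.snapshot()
server.apply("alice", 2)
snap2 = server.snapshot()

for client in (alice, bob):
    client.receive(snap2)
    client.receive(snap1)   # arrives late and is ignored
```

Both clients end up with the same state as the server even though the snapshots arrived out of order.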

Among the factors necessary for the success of this type of games are the following:

- high speed and high reliability connection

- great CPU/GPU processing power of the devices

User Interface (GUI)

All video game engines have a subsystem that manages the graphical user interface: visual components, menus, buttons, dialogues, sliders, layouts, etc. A GUI is essentially a collection of text and image elements that the user can select with the keyboard, mouse or gamepad.

Scripting

The logic of the game is written by the programmer through the classes associated with the various objects. Among the most used languages are C++ (used in Unreal Engine) and C# and JavaScript (used in Unity3D). Thanks to the engine, code written in these languages can be ported, largely without changes, to many platforms: PC, Mac, Web, iOS and Android devices, PS3, Xbox 360.

In Unity3D each GameObject has various components associated with it. One of the components is the script, which generally inherits from the MonoBehaviour class. Each script contains various functions or methods to manage the various events: start, update, particle collision management, camera movement, lighting manipulation, etc.

Unreal Engine 4 uses a visual scripting system called Blueprint, which makes it possible to define the behavior of classes and objects. In theory it is possible to develop a game without writing a line of code. However, the system also allows the programmer to write code directly in C++.

1.3) Hardware Abstraction (HAL)

The game engine subsystems generally make calls to the underlying hardware level (HAL or Hardware Abstraction Layer) components such as OpenGL or DirectX. Calls are made using a standard API (Application Programming Interface). A hardware abstraction layer allows an application program to communicate with specific hardware. The main purpose of the HAL level is to hide the different hardware architectures and provide a uniform interface to the programs.
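The idea of a uniform interface over different backends can be sketched with an abstract class. The backends below are fakes that only return strings; the names `GraphicsBackend`, `FakeOpenGLBackend`, `FakeDirect3DBackend` and `render_scene` are assumptions and do not correspond to real OpenGL or DirectX calls.

```python
from abc import ABC, abstractmethod


class GraphicsBackend(ABC):
    """Uniform interface the engine codes against (hypothetical)."""

    @abstractmethod
    def draw_triangle(self, vertices):
        """Return a description of the draw call that was issued."""


class FakeOpenGLBackend(GraphicsBackend):
    def draw_triangle(self, vertices):
        return f"opengl: draw {len(vertices)} vertices"


class FakeDirect3DBackend(GraphicsBackend):
    def draw_triangle(self, vertices):
        return f"direct3d: draw {len(vertices)} vertices"


def render_scene(backend, triangles):
    # The engine code is identical whichever backend is plugged in.
    return [backend.draw_triangle(t) for t in triangles]


triangle = [(0, 0), (1, 0), (0, 1)]
gl_calls = render_scene(FakeOpenGLBackend(), [triangle])
d3d_calls = render_scene(FakeDirect3DBackend(), [triangle])
```

Supporting a new platform then means writing one more backend class, while `render_scene` and the rest of the engine stay unchanged.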

1.4) The operating system

A fundamental function of game engines is support for multi-platform development; a game developed with a framework can be exported to many different platforms: Windows, Mac, iOS, Android, consoles, etc.

The main engines (Unreal Engine, Unity3D) have built-in easy-to-use support for publishing a game on different platforms.

1.5) Device drivers

Device drivers are software components that the operating system uses to interact with various types of devices (graphics card, sound card, modem, keyboard, mouse, joystick, etc.). The functions present in the drivers contain the specific code for the individual hardware devices that interact with the video game.

1.6) The hardware level

The hardware level represents the physical level. The hardware is the video screen that displays the images of the game, the keyboard, the joystick, the microphone, the smartphone, etc. Modern video games can be run on a variety of hardware platforms (PC and Mac, consoles, smartphones).

Conclusion

Modern game engines are indispensable tools for managing the development and testing of video games. They provide the basic reusable materials, common to all games, that the developer can use to build his game. The programmer can thus concentrate his creativity on the application part: the scene, the characters, the textures, the interaction between the objects and so on.

Several video game engines are available on the market; among the most important are Unity3D, Unreal Engine, CryEngine, OGRE3D (this one is open source). They differ in quality, ease of use, performance and price.

Bibliography

[1] Jason Gregory – Game Engine Architecture (CRC Press, 2014)

[2] Robert Nystrom – Game Programming Patterns (Genever Benning, 2014)

[3] Eric Lengyel – Mathematics for 3D Game Programming and Computer Graphics (Course Technology, 2012)
